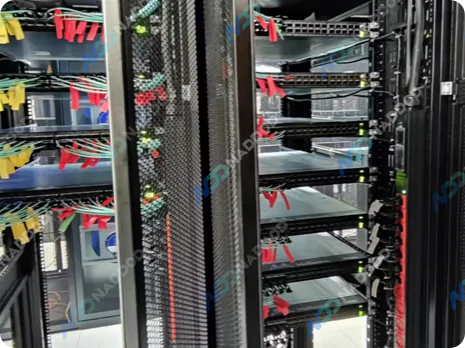

NDR Multimode Transceivers Deployment

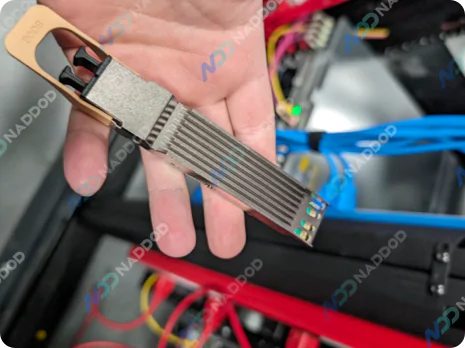

InfiniBand NDR multimode transceivers deliver cost-effective, reliable performance over short distances.

Adaptive routing, in-network computing, and a congestion control architecture enable InfiniBand to meet the rigorous demands of HPC and AI clusters. These optimizations keep data flowing smoothly, reduce bottlenecks, and make efficient use of resources, improving performance and operational efficiency for complex infrastructures.

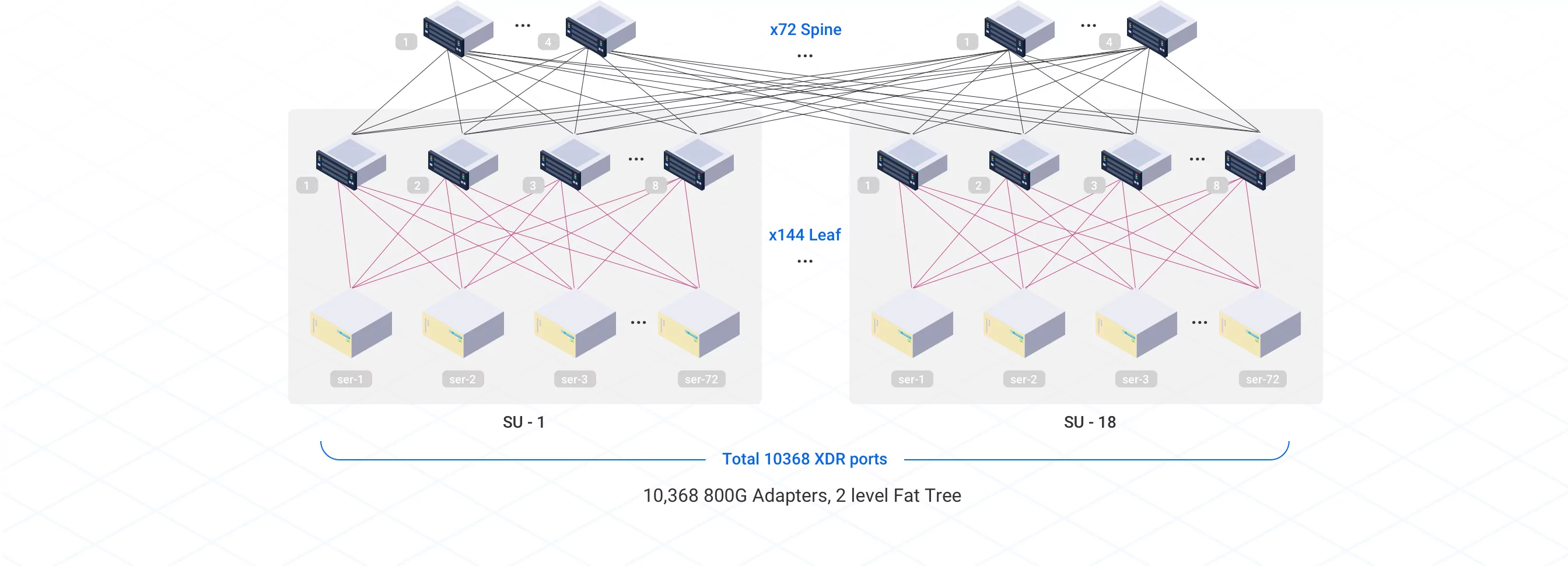

For large-scale InfiniBand AI cluster deployments built on NVIDIA H100 and H200 GPUs or DGX solutions (GB200, GB300), a fat-tree network topology is well suited to handling intensive AI workloads.
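As a rough illustration of how a fat-tree fabric scales, the sketch below applies the standard non-blocking fat-tree formulas for identical switches: about k²/2 endpoints with two tiers and k³/4 with three tiers, where k is the switch port count (radix). The function name `fat_tree_capacity` and the radix of 64 used in the example are assumptions chosen for illustration, not figures taken from this page; substitute the port count of the switch you actually deploy.

```python
def fat_tree_capacity(radix: int, tiers: int) -> dict:
    """Endpoint and switch counts for a non-blocking fat tree.

    Assumes every switch has the same port count (radix) and that each
    leaf splits its ports evenly between endpoints and uplinks.
    """
    if radix < 2 or radix % 2:
        raise ValueError("radix must be an even integer >= 2")
    if tiers not in (2, 3):
        raise ValueError("this sketch covers 2- and 3-tier fabrics only")

    half = radix // 2  # ports per leaf facing endpoints (and facing uplinks)

    if tiers == 2:
        leaves = radix   # each spine port connects to a distinct leaf
        spines = half    # each leaf uplink connects to a distinct spine
        cores = 0
    else:
        pods = radix            # classic k-ary fat tree: k pods
        leaves = pods * half    # k/2 leaf switches per pod
        spines = pods * half    # k/2 spine switches per pod
        cores = half * half     # (k/2)^2 core switches

    return {
        "endpoints": leaves * half,
        "leaf": leaves,
        "spine": spines,
        "core": cores,
    }


if __name__ == "__main__":
    # Illustrative radix only; not a value stated in this document.
    for tiers in (2, 3):
        print(tiers, fat_tree_capacity(radix=64, tiers=tiers))
```

Real deployments often deviate from these idealized counts, for example by oversubscribing uplinks or by attaching several network ports per GPU server, so treat the output as an upper bound on endpoints per fabric rather than a bill of materials.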

Flexible solutions tailored to varying AI cluster sizes, data center layouts, and connection distances.

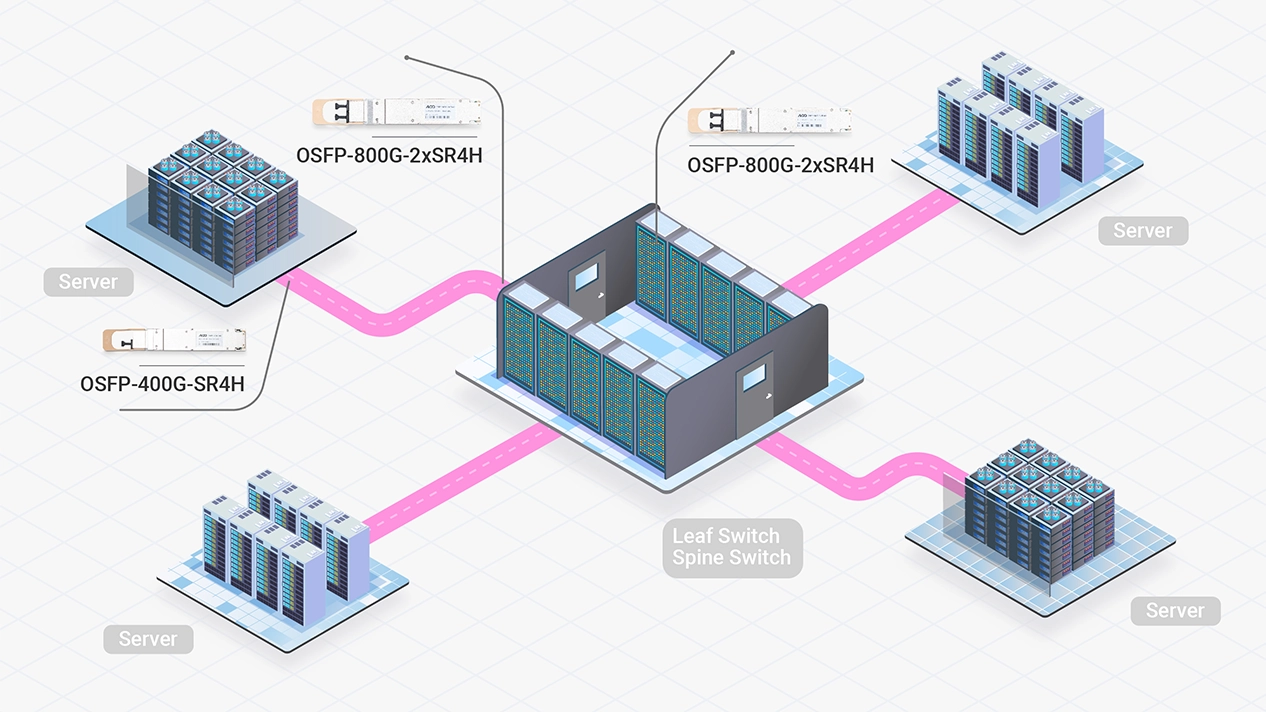

High-performance InfiniBand network deployment designed for compact AI data centers

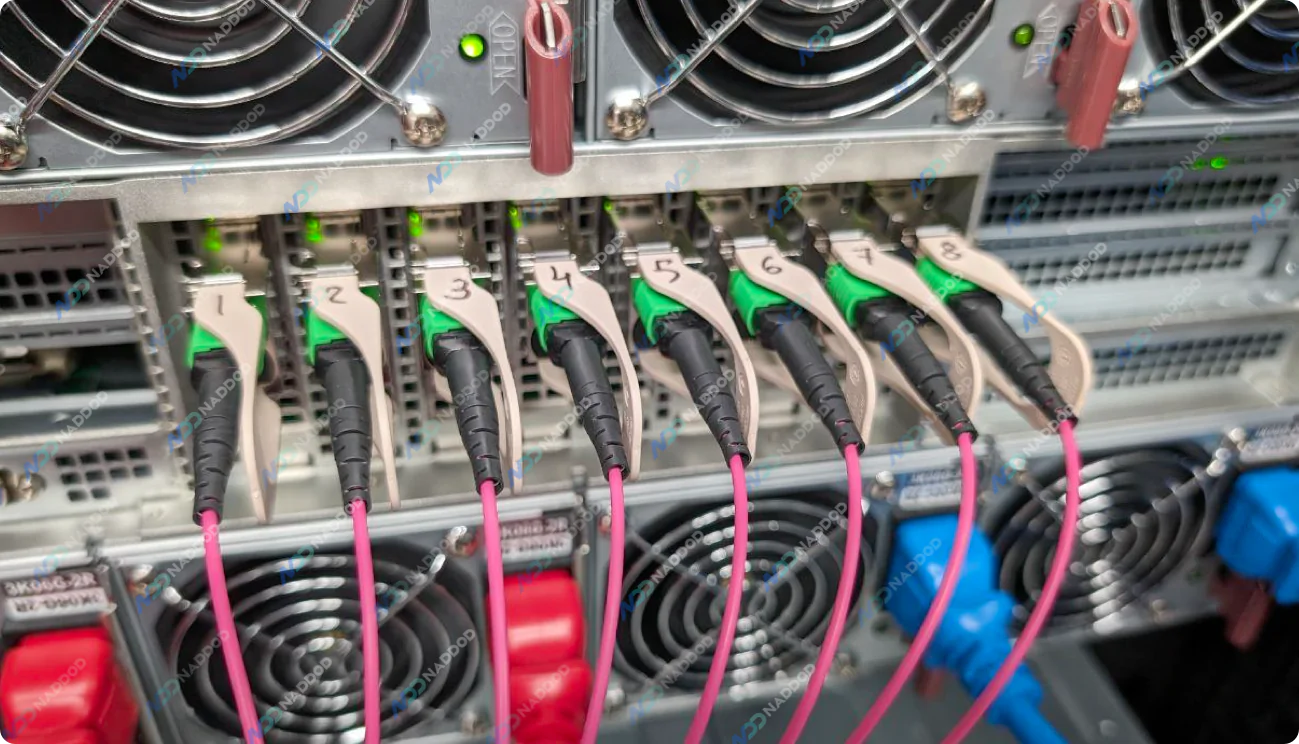

InfiniBand NDR multimode transceivers deliver cost-effective, reliable performance over short distances.

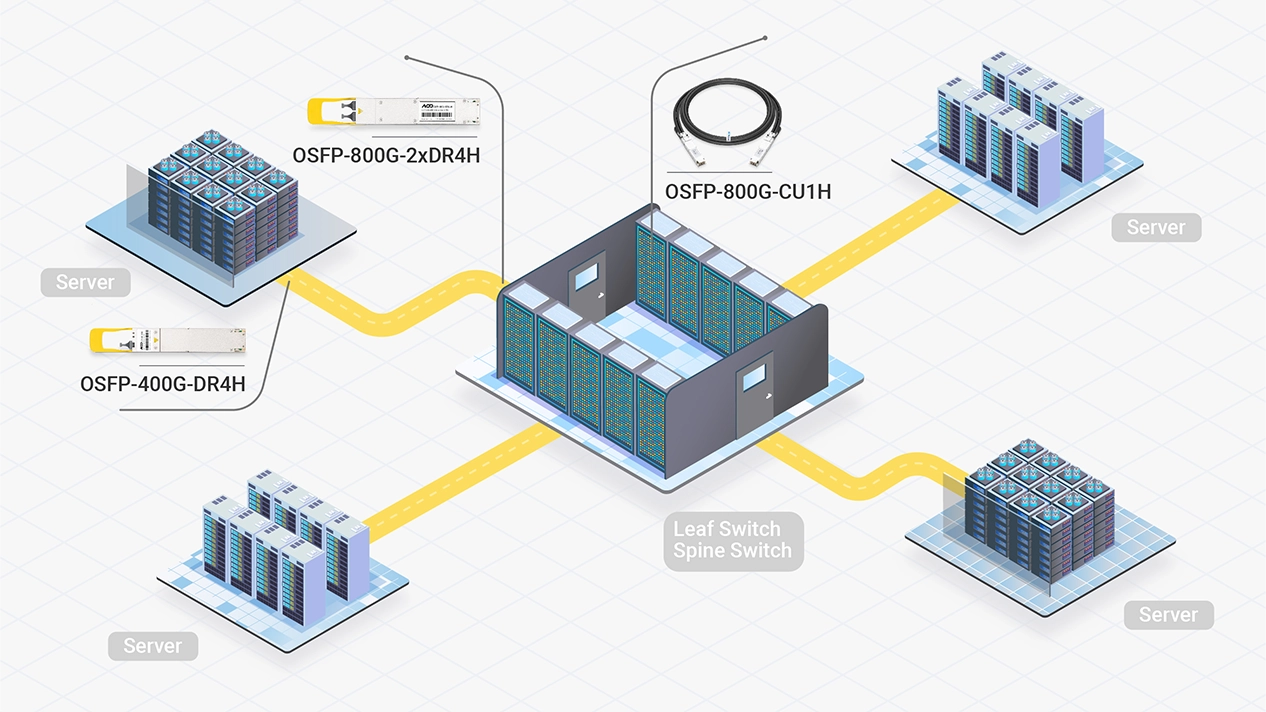

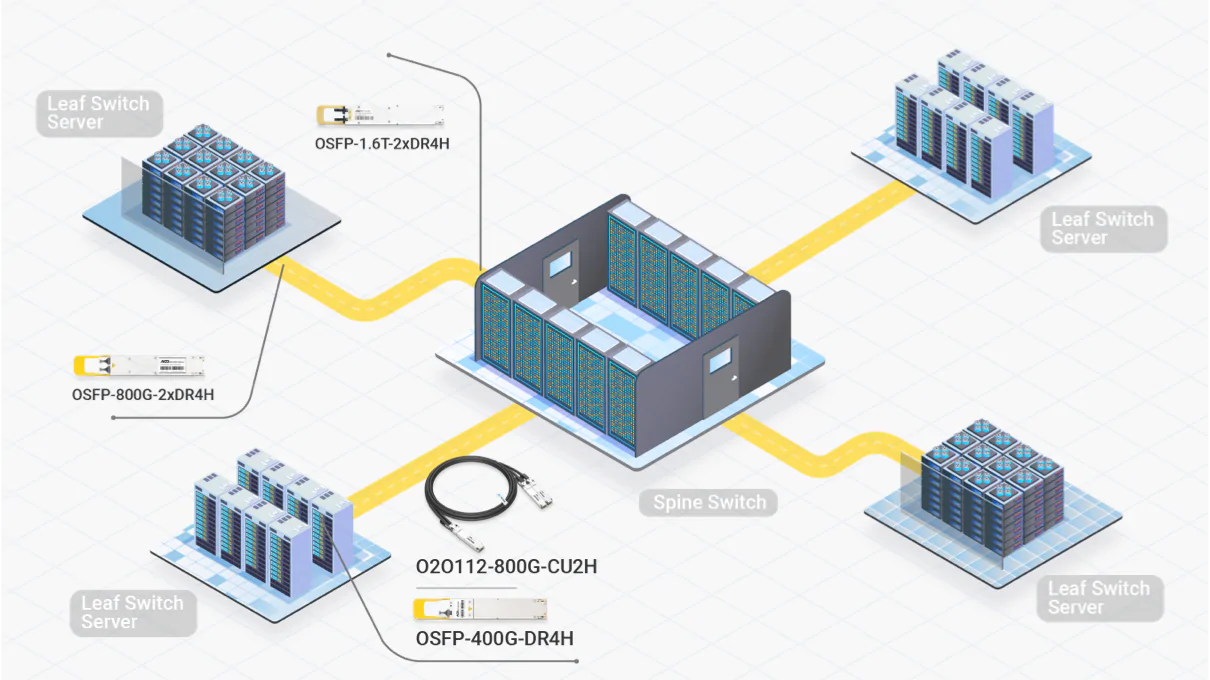

A high-performance, cost-effective way to upgrade or build more scalable AI clusters.

InfiniBand NDR single-mode transceivers enable stable, long-distance connections, while DAC cables lower costs and power consumption. Together, they provide an efficient solution for mid-to-large clusters.

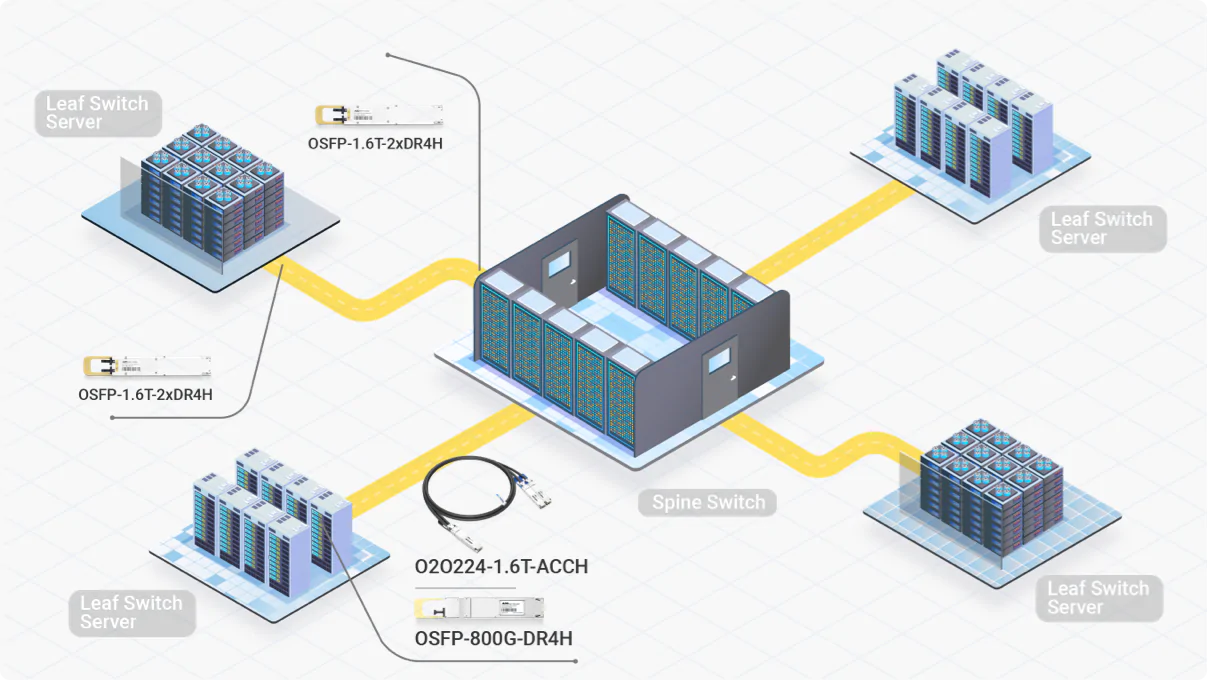

Meeting the extreme bandwidth and latency demands of trillion-parameter, ultra-large models.

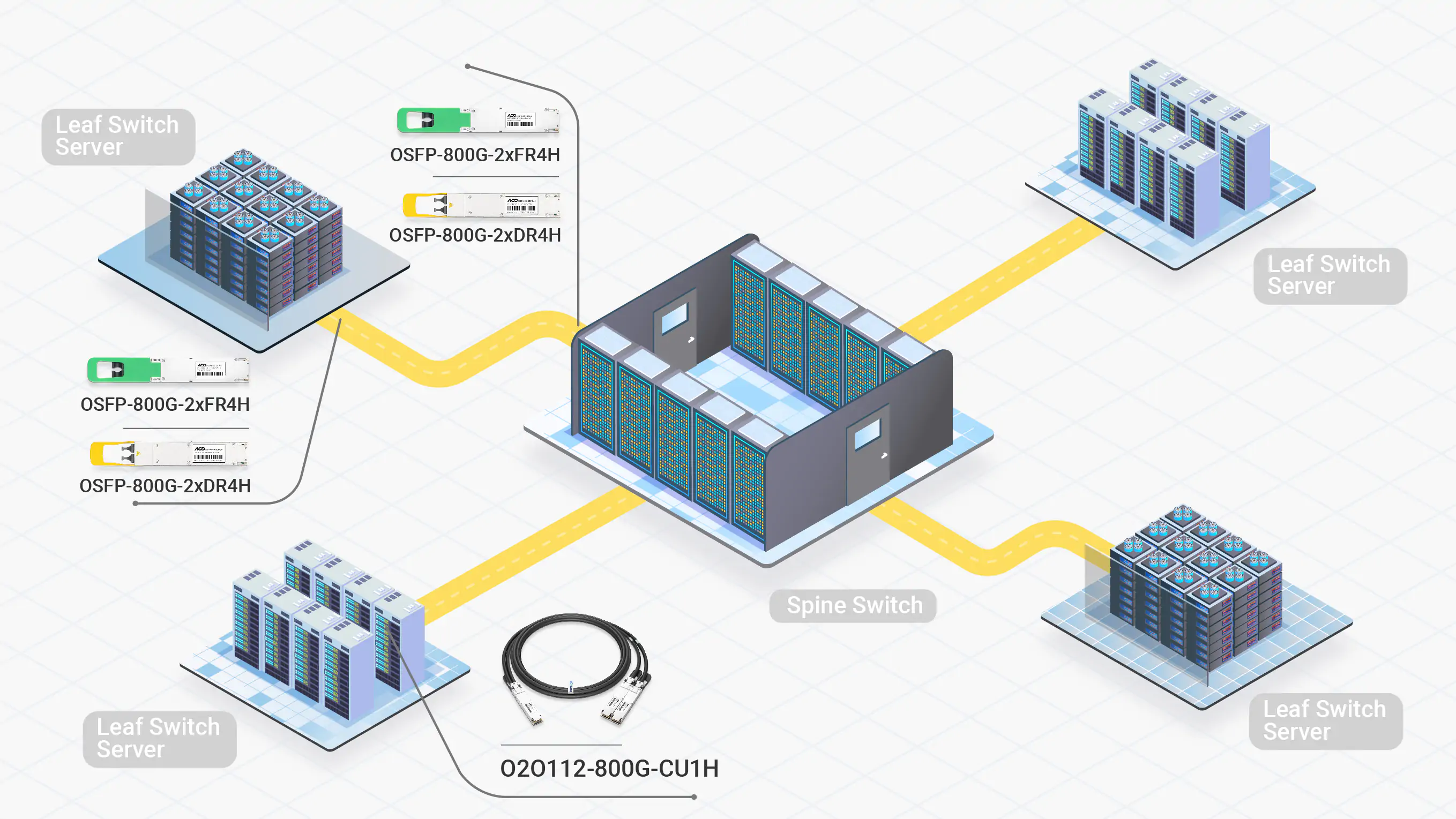

For large AI clusters, a hybrid approach that pairs DAC cables with XDR or NDR transceivers is commonly adopted. It delivers ultra-high speeds for demanding AI workloads on cost-efficient infrastructure.

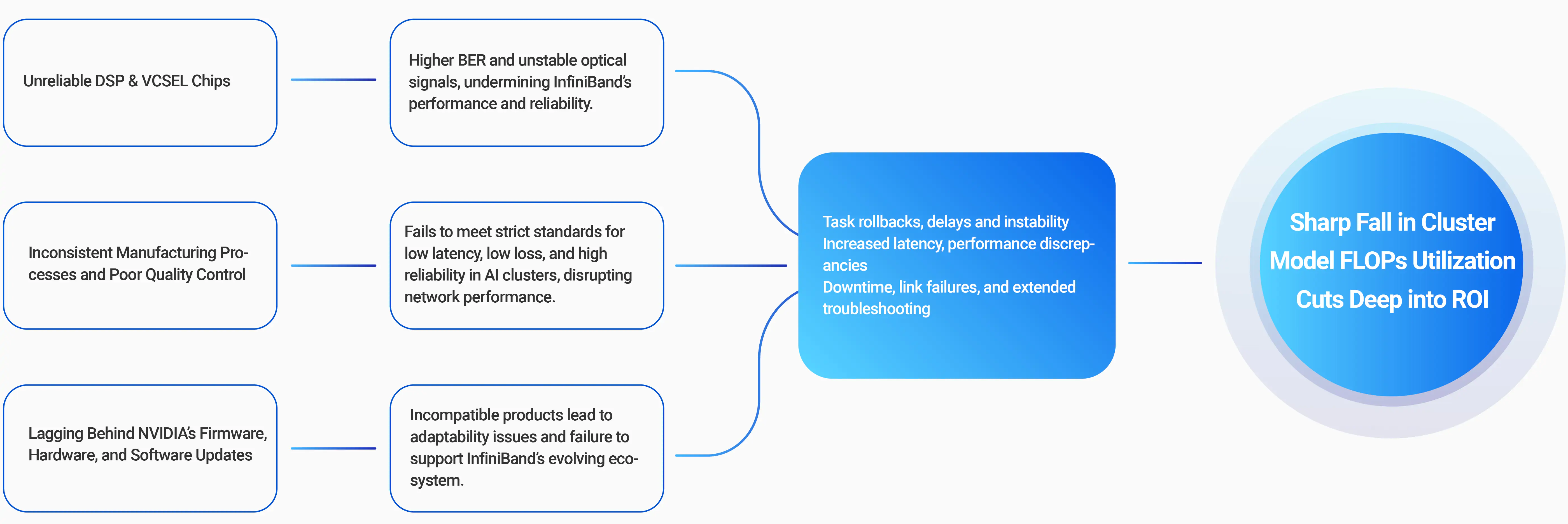

80% of AI Training Interruptions Stem from Network-Side Issues

95% of Network Problems Are Often Linked to Faulty Optical Interconnects

NVIDIA Quantum-X800 and Quantum-2 connectivity options enable flexible topologies with a variety of transceivers, MPO connectors, ACCs, and DACs. Backward compatibility connects 800Gb/s and 400Gb/s clusters to existing 200Gb/s or 100Gb/s infrastructures, ensuring seamless scalability and integration.

InfiniBand XDR Optics and Cables

The NVIDIA Quantum‑X800 and Quantum‑2 switches are designed to meet the demands of high-performance AI and HPC networks, delivering 800Gb/s and 400Gb/s per port, respectively, and are available in air-cooled or liquid-cooled configurations to suit diverse data center needs.

Quantum-X800 Switches

The NVIDIA ConnectX-8 and ConnectX-7 InfiniBand adapters deliver high performance for AI and HPC workloads, offering single or dual network ports with speeds of up to 800Gb/s, and are available in multiple form factors to meet diverse deployment needs.

ConnectX-8 Adapters

Partner with NADDOD to Accelerate Your InfiniBand Network for Next-Gen AI Innovation