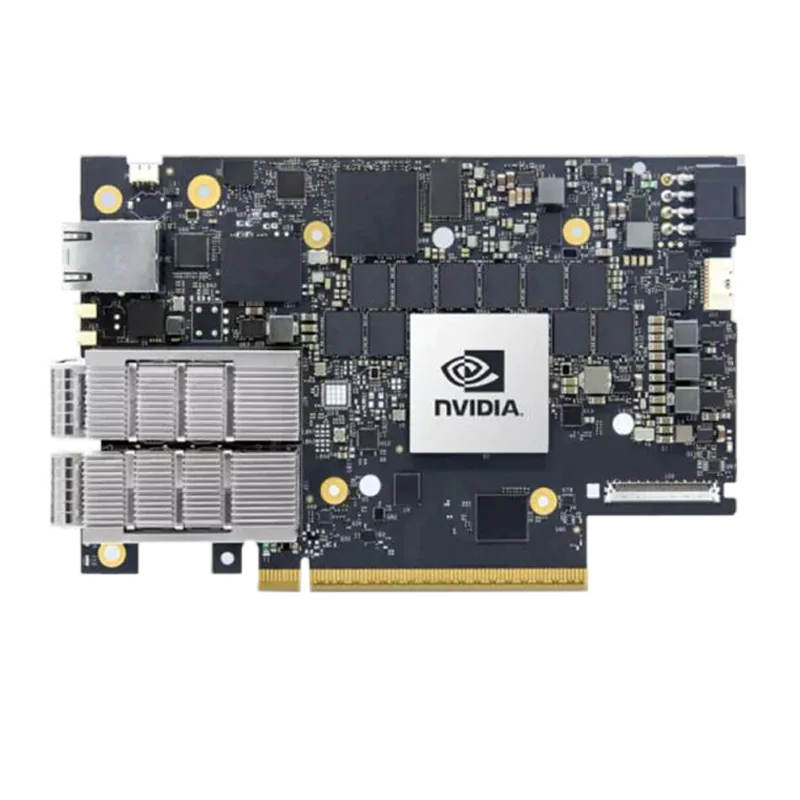

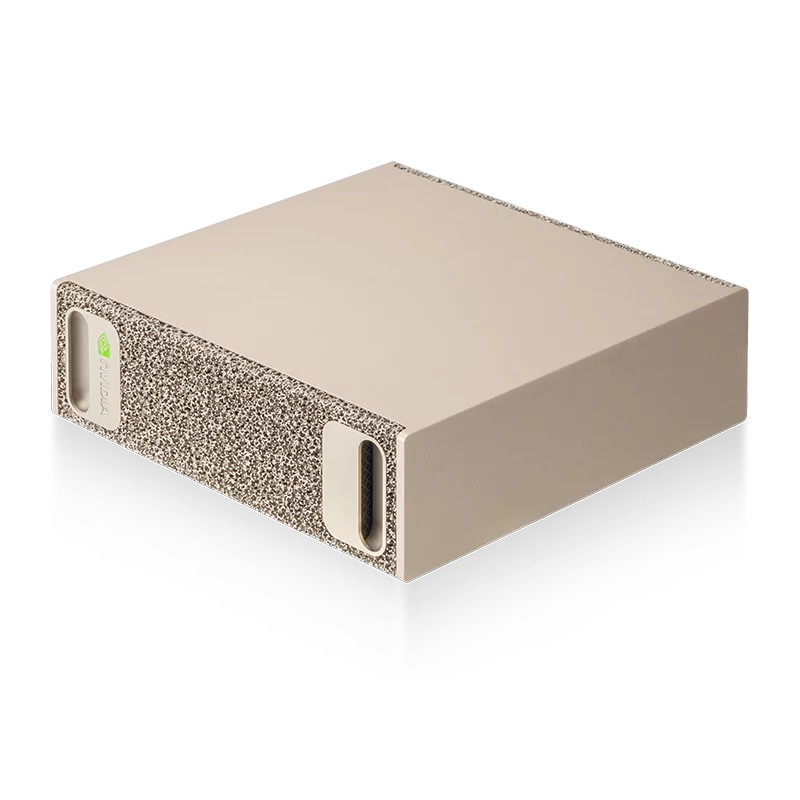

NADDOD N9570-128QC: 51.2T 128×400G Switch Powered by NVIDIA Spectrum-4 Chip

As AI training clusters scale, network latency, stability, and link utilization become critical. Learn how the NADDOD N9570-128QC leverages NVIDIA Spectrum-4 and ConnectX-8 to address RoCE (RDMA over Converged Ethernet) performance challenges in large-scale GPU clusters.

Neo

Jan 27, 2026