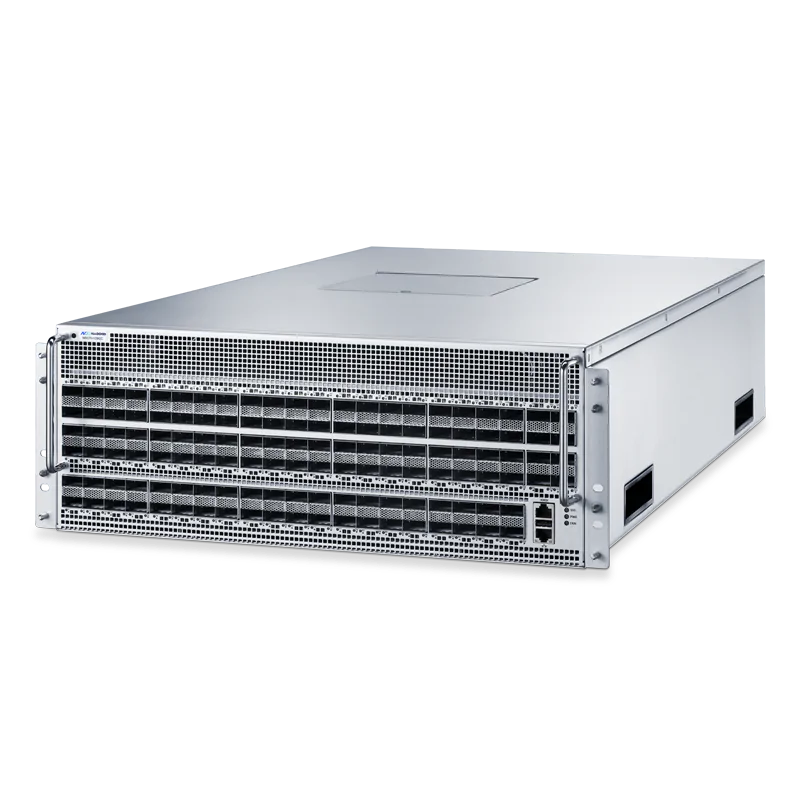

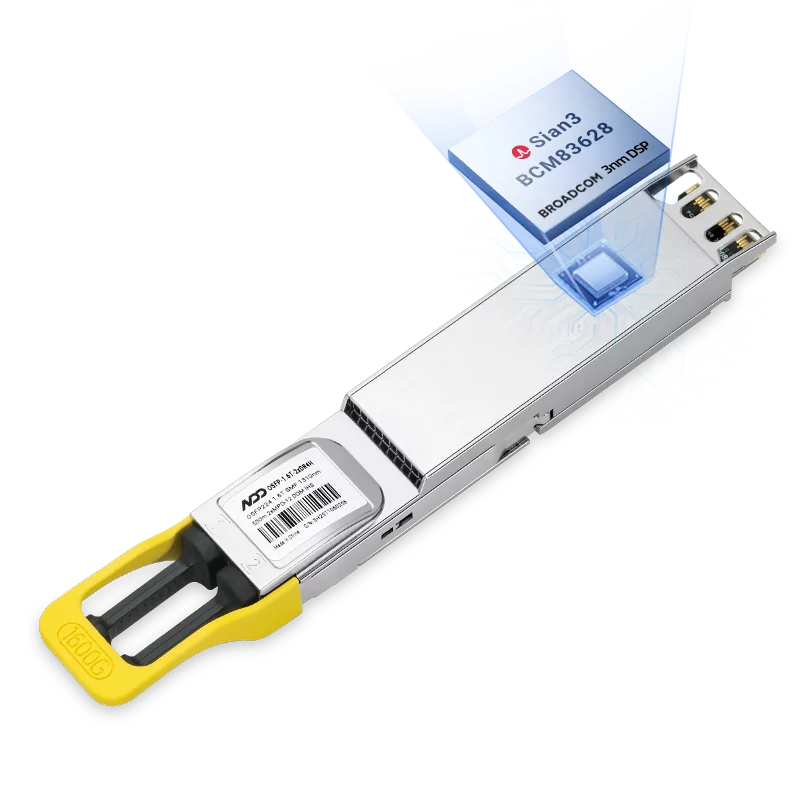

1.6T/800G XDR Hot

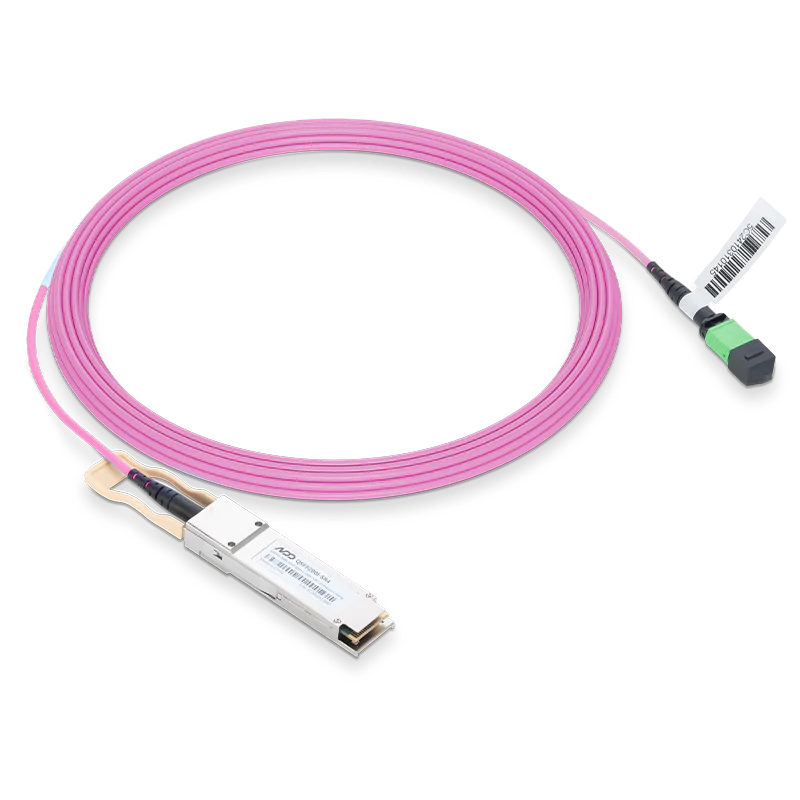

200G QSFP56 to QSFP56 AOC In Stock

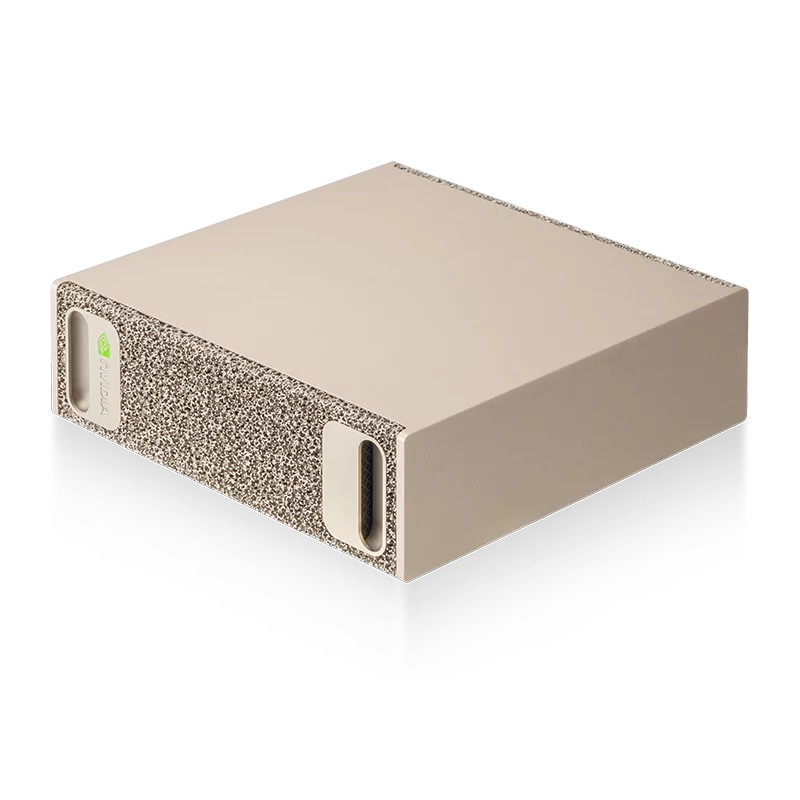

1.6T OSFP224 Hot

1.6T OSFP224 DAC New

400G OSFP DAC New

200G QSFP56 DAC Hot

100G/56G/40G QSFP DAC On Sale

25G/10G SFP DAC On Sale

100G Breakout DAC On Sale

1.6T OSFP224 ACC New