Find the best fit for your network needs

share:

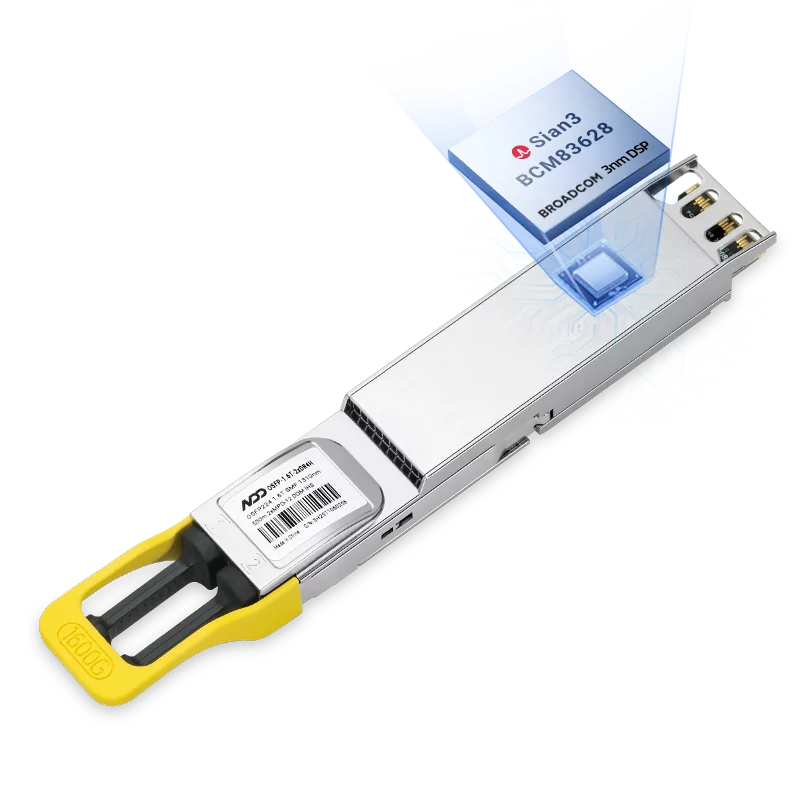

800GBASE-2xSR4 OSFP PAM4 850nm 50m MMF Module

Popular

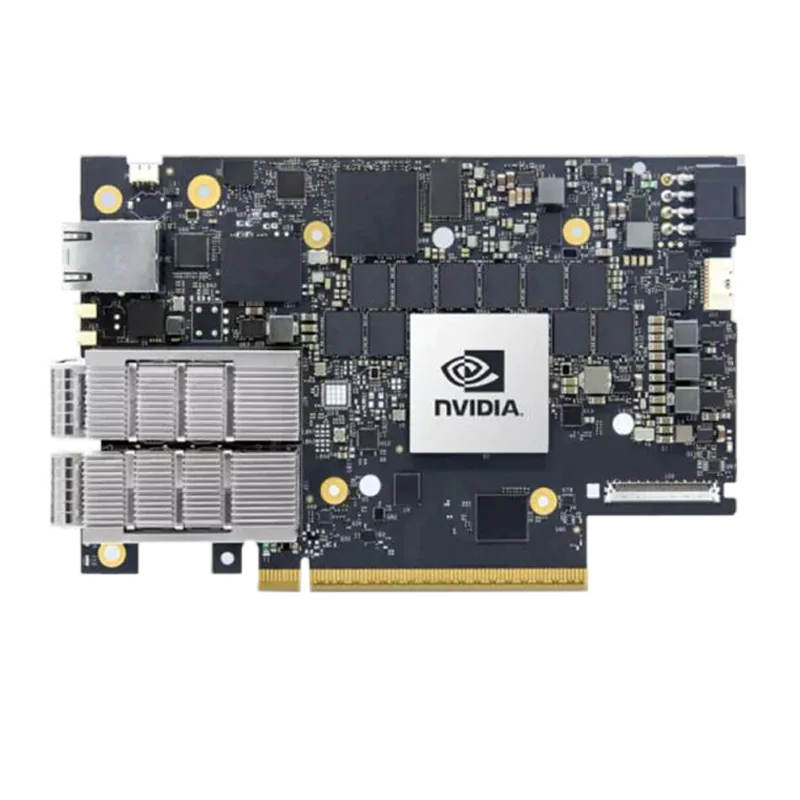

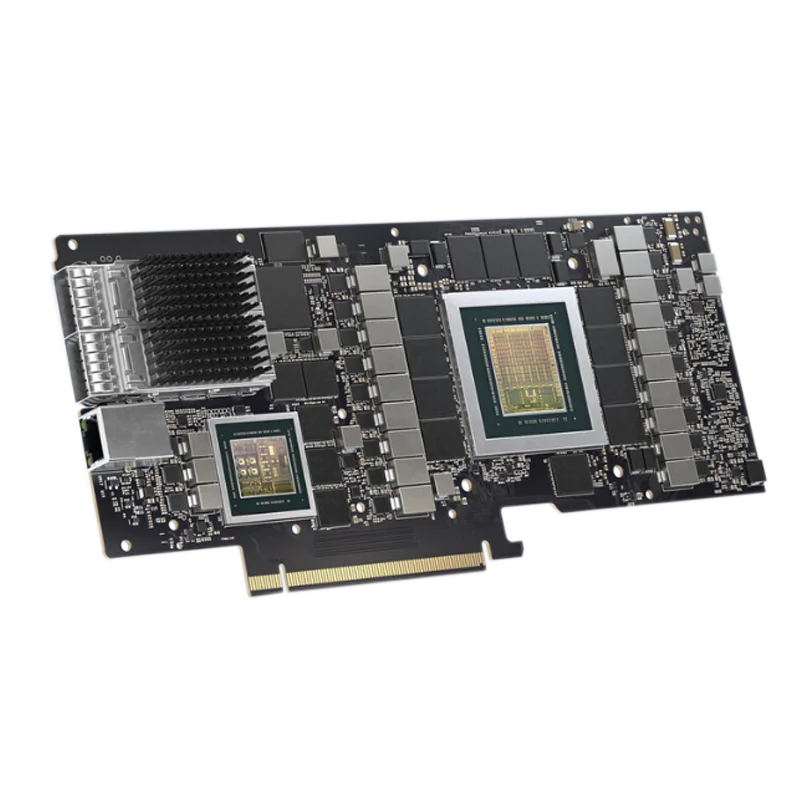

- 1Quick Understanding GPU Server Network Card Configuration in AI Era

- 2Optimizing AI GPU Clusters Network and Scale

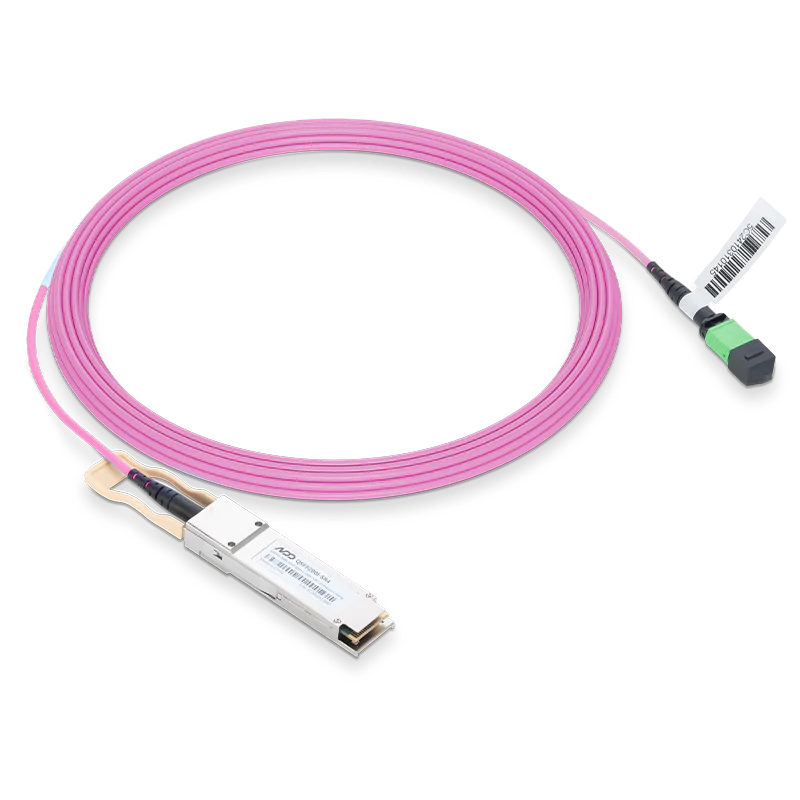

- 3Overcoming Data Center’s Three Key Challenges with 800G Optical Transceivers

- 4The Key Role of High-quality Optical Transceivers in AI Networks

- 5Common Problems While Using Optical Transceivers in AI Clusters