Driving the Data Center Network Revolution: The Perfect Combination of 800G Optical Modules and NDR Switches



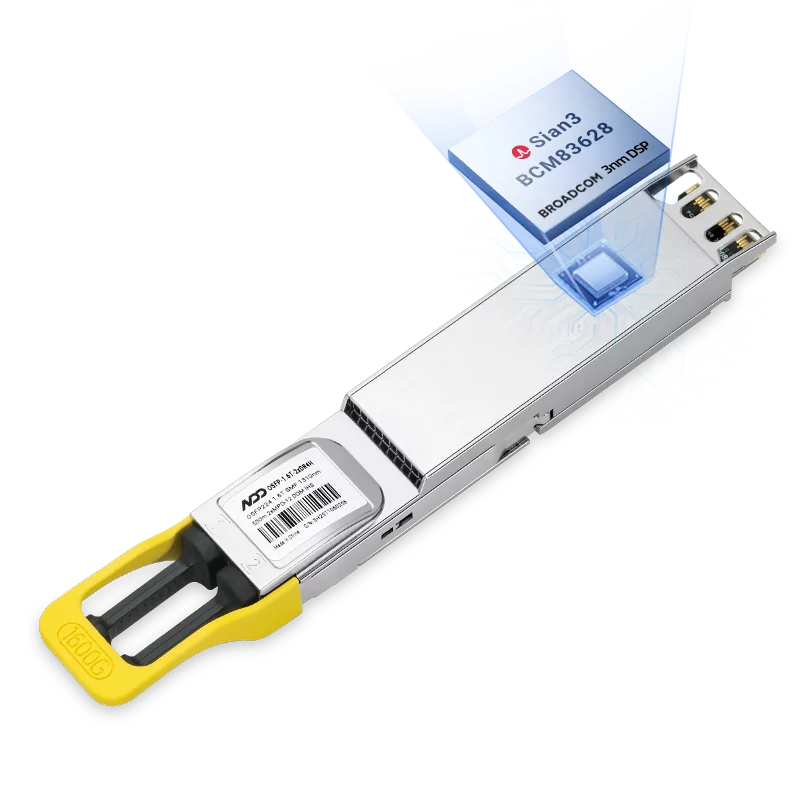

800GBASE-2xSR4 OSFP PAM4 850nm 50m MMF Module
