1.6T/800G XDR New

800G/400G NDR Hot

800G IB OSFP Transceivers In Stock

400G IB OSFP/QSFP112 Transceivers In Stock

400G OSFP to 2x200G QSFP56 AOC Splitter In Stock

1.6T OSFP224 New

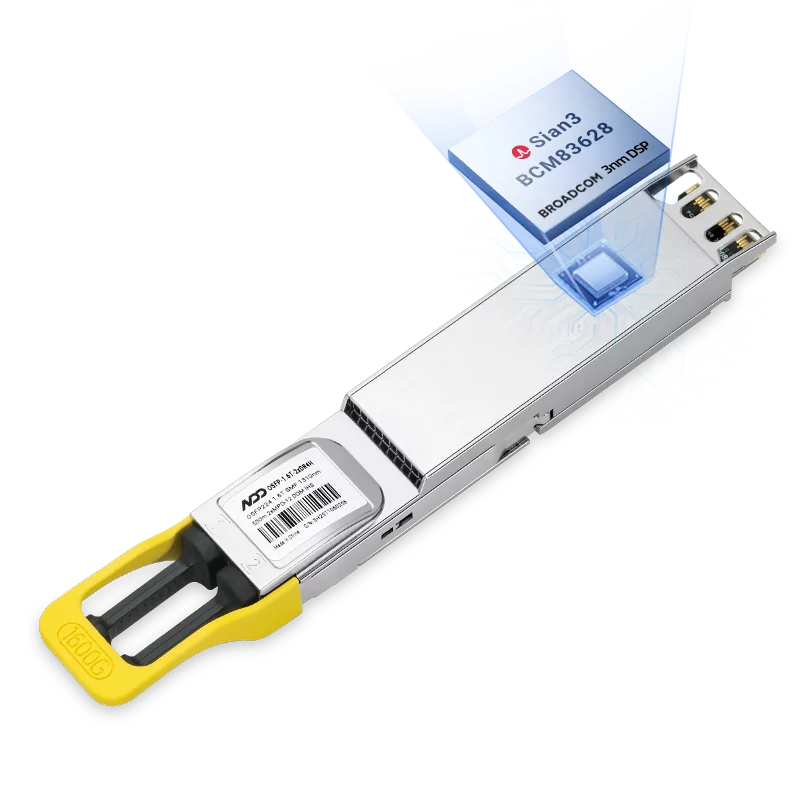

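NADDOD's 1.6T OSFP224 InfiniBand XDR optical transceivers provide stable long-reach transmission over single-mode fiber at 500m and 2km, and are designed specifically for efficient AI training, HPC, and data centers. These 1.6T OSFP224 optical transceivers are rigorously tested for ultra-low bit error rate, low latency, and cost-efficient performance, and offer excellent interoperability with NVIDIA XDR systems.

NADDOD's 1.6T OSFP DAC cables (OSFP224 to OSFP224) are designed for high-performance InfiniBand XDR networks and support data rates of up to 1.6Tbps. These 1.6T OSFP DAC cables provide ultra-low-latency, low-power connectivity between OSFP224 ports. They are mainly used for direct connections between 1.6T OSFP switches and ConnectX-8 SuperNIC network cards, and are fully compatible with the NVIDIA Quantum-X800 Q3400-RA switch. They are well suited to short-reach, high-speed links that require 1.6T throughput in AI, HPC, and data center environments.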

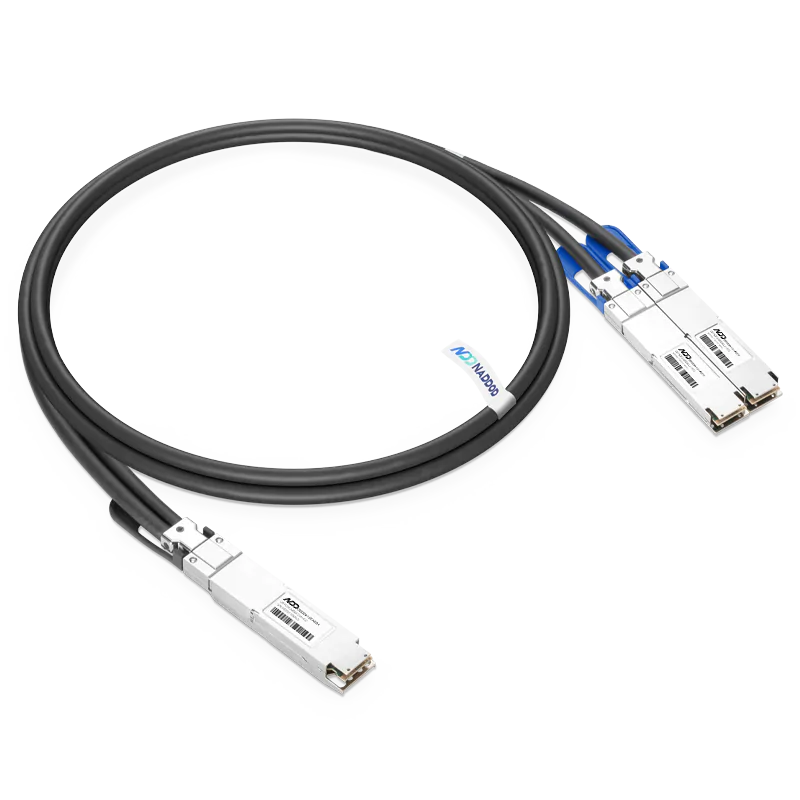

NADDOD's 1.6T OSFP224 to 2x800G OSFP224 DAC splitter cable is designed for InfiniBand XDR networks and provides a high-speed breakout connection from one 1.6T OSFP224 port to two 800G OSFP224 ports. This 1.6T breakout DAC cable is mainly used to interconnect 1.6T switches with 800G NICs, and is fully compatible with the NVIDIA Quantum-X800 Q3400-RA switch and the NVIDIA ConnectX-8 C8180 SuperNIC. NADDOD's 1.6T breakout DAC splitter cable offers a cost-effective, scalable solution for heavily loaded networks in AI, HPC, and data center infrastructure.

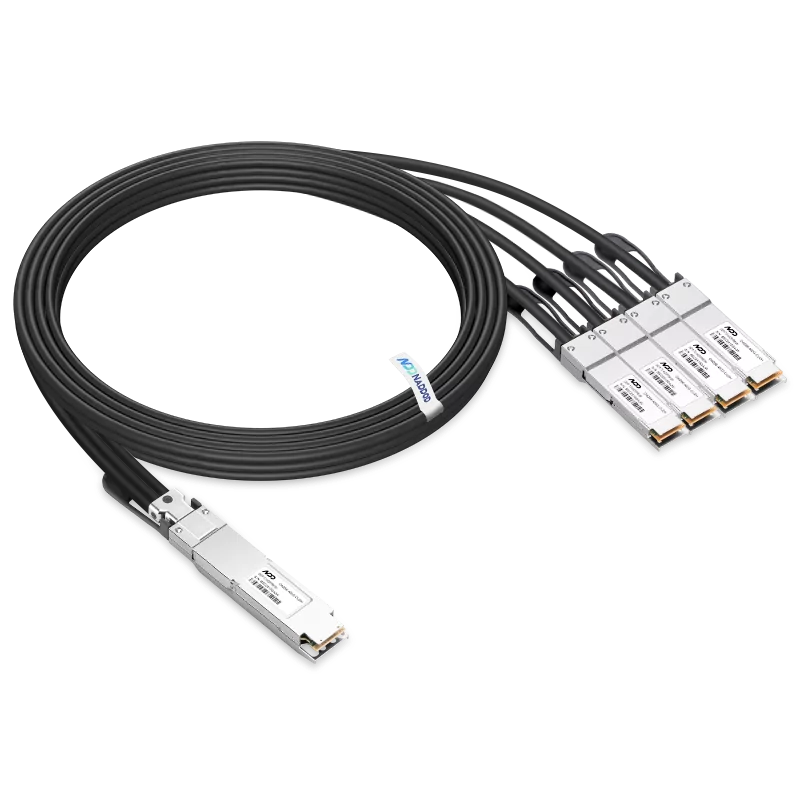

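NADDOD's 1.6T OSFP224 to 4x400G OSFP224 DAC splitter cable is designed specifically for InfiniBand XDR networks and provides a high-speed breakout connection from one 1.6T OSFP224 port to four 400G OSFP224 ports. Mainly used to connect 1.6T switches to 400G NICs, this breakout DAC cable ensures seamless compatibility with the NVIDIA Quantum-X800 Q3400-RA switch and the NVIDIA ConnectX-8 C8180 SuperNIC. It offers a cost-effective, scalable interconnect solution and is well suited to AI, HPC, and modern data center environments.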
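The breakout arithmetic behind the two splitter cables above (1.6T to 2x800G and 1.6T to 4x400G) can be sketched as follows. This is a minimal illustration rather than vendor tooling: the helper name `breakout_leg_gbps` is hypothetical, and it assumes the port's bandwidth is divided evenly across the legs.

```python
def breakout_leg_gbps(port_gbps: int, legs: int) -> int:
    """Return the per-leg rate when a port is split evenly into `legs` ends.

    Hypothetical helper for illustration only; assumes an even split.
    """
    if legs <= 0 or port_gbps % legs != 0:
        raise ValueError("port rate must divide evenly across the legs")
    return port_gbps // legs


# One 1.6T (1600G) OSFP224 port split into two or four legs.
for legs in (2, 4):
    print(f"1600G -> {legs} x {breakout_leg_gbps(1600, legs)}G")
```

The two results match the 2x800G and 4x400G configurations named in the cable descriptions.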

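NADDOD's 800G OSFP224 InfiniBand XDR optical transceivers use the OSFP form factor and single-mode fiber as the medium. The product portfolio includes 800G DR4 (500m). This product is used for high-performance connections between Quantum-X800 QM3x00 switches, fitted with twin-port OSFP 2x800Gb/s optical transceivers, and the dual 800Gb/s ConnectX-8 mezzanine cards located in GB200-based liquid-cooled systems.

NADDOD offers 1.6T/800G InfiniBand XDR solutions that combine low-power optical transceivers with high-performance copper connectivity for high-speed networking in HPC and AIDC. The InfiniBand XDR optical transceiver portfolio includes OSFP-1.6T-2xDR4H, OSFP-1.6T-2xFR4H, and OSFP-800G-DR4H, all rigorously tested for ultra-low bit error rate, low latency, and the best out-of-the-box installation experience, performance, and durability. The active copper cable OSFP-1.6T-AC3H, the active copper splitter cable O2O224-1.6T-ACCH, and the passive copper cable OSFP-1.6T-CU0-9H provide cost-effective, low-latency, power-saving connectivity with high bandwidth density, enabling seamless 1.6T performance in dense data center environments.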

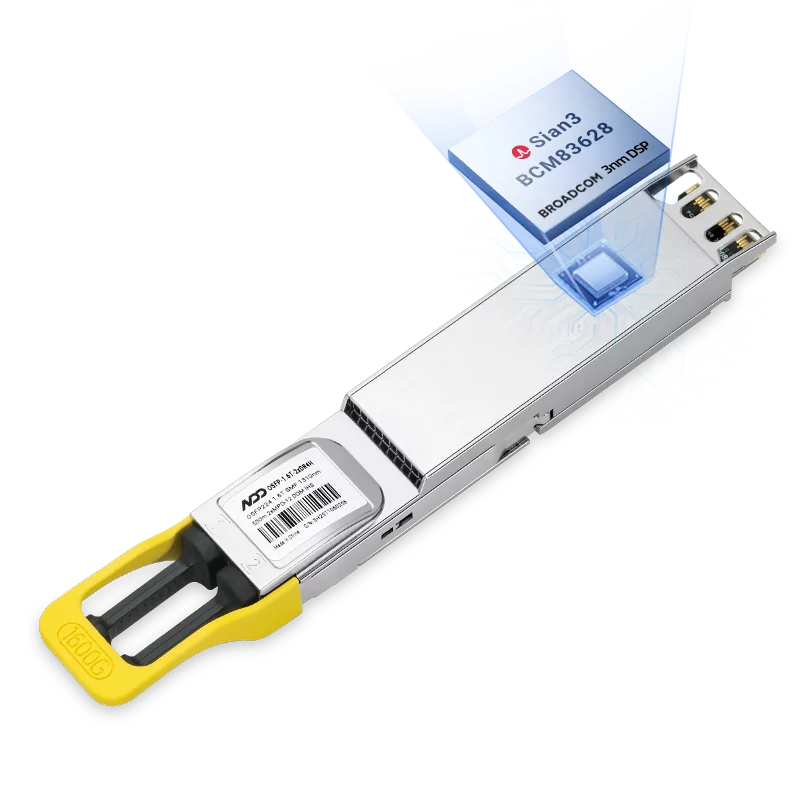

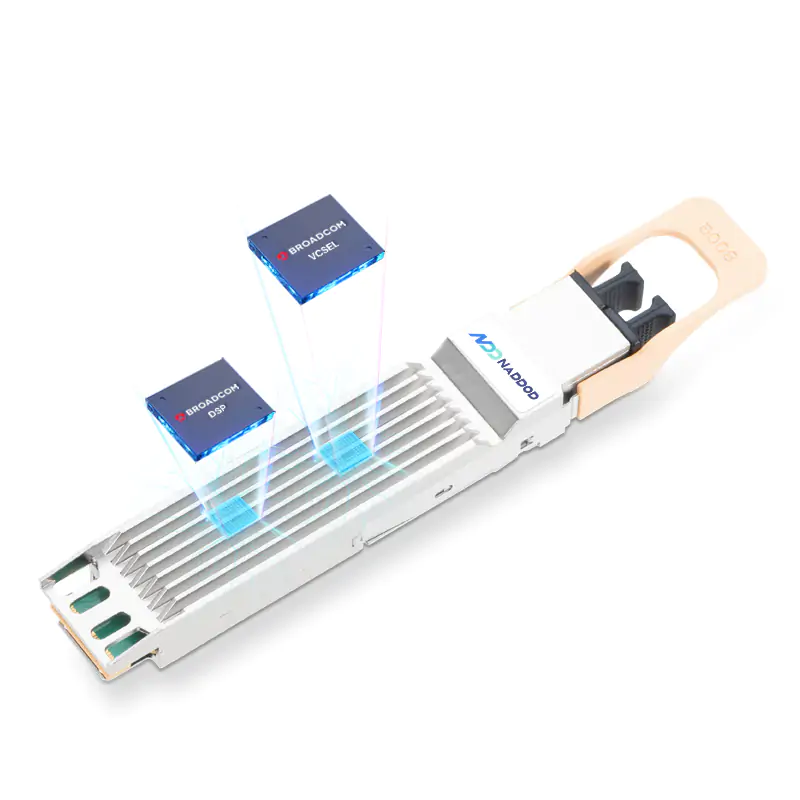

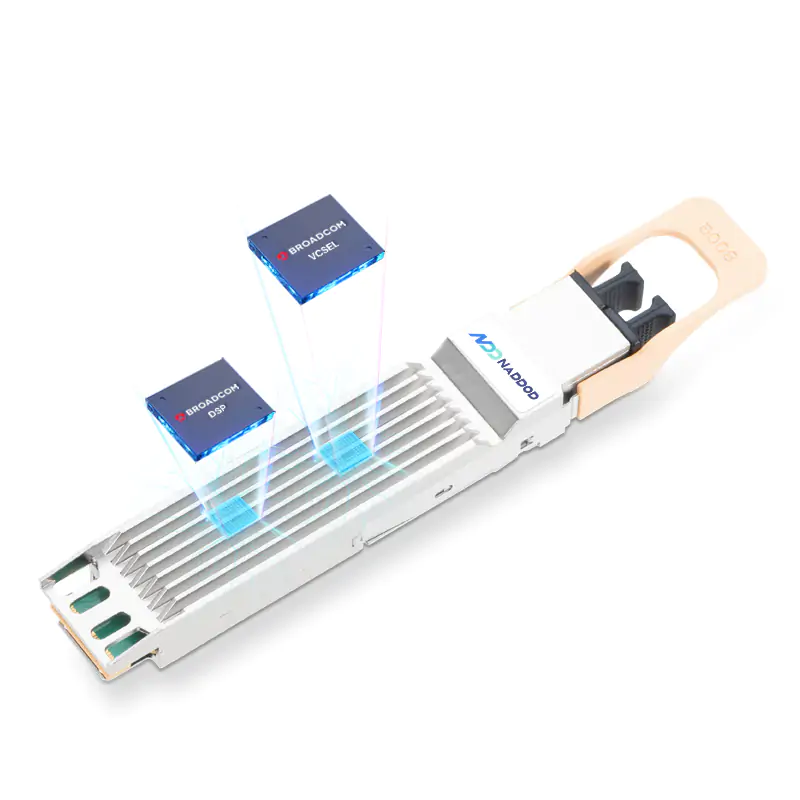

NADDOD 800G InfiniBand NDR modules come in the OSFP form factor and run over single-mode or multimode fiber. The product portfolio comprises 800G 2×SR4, 2×DR4, and 2×FR4, with interconnection distances ranging from 30m to 2km. NADDOD 800G 2×SR4 modules use a Broadcom VCSEL and a Broadcom DSP to deliver high-quality signal transmission. NADDOD 800G 2×DR4 and 2×FR4 modules can be combined with low-cost DAC by adjusting the layout of the data center, providing high bandwidth and efficiency.

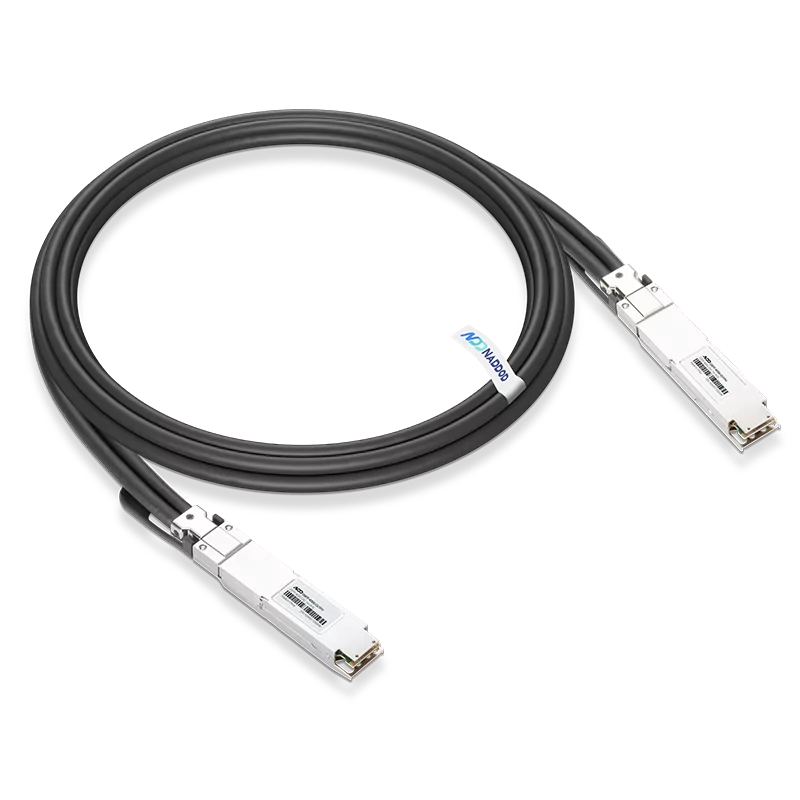

NADDOD 800G Twin-Port OSFP to OSFP InfiniBand NDR Direct Attach Cables (DAC) feature an advanced twinax construction with eight high-speed electrical copper pairs, enabling an aggregate bandwidth of 800Gbps. These 800G DAC cables deliver high-performance, low-latency connectivity for short-distance transmission in HPC and AI data center environments. NADDOD 800G OSFP DAC cables are available in both passive and active copper versions and are fully tested on NVIDIA QM9700/9790 switches, making them ideal for interconnections between Quantum-2 InfiniBand switches.

NADDOD 800G breakout InfiniBand NDR DAC includes 800G Twin-port 2x400Gb/s OSFP to 2x400Gb/s OSFP InfiniBand NDR passive and active copper splitter cables. It provides connectivity between system units with an 800Gb/s OSFP port on one side and two 400Gb/s OSFP ports on the other. It is a high-quality, cost-effective alternative to fiber optics in 800Gb/s to 400Gb/s applications for high-speed, efficient, and sustainable network connectivity.

NADDOD 800G breakout InfiniBand NDR DAC comprises Twin-port 2x400G OSFP to 4x200Gb/s OSFP InfiniBand NDR passive and active copper splitter cables. It provides connectivity between system units with an 800Gb/s OSFP port on one side and four 200Gb/s OSFP ports on the other. It is designed to provide ultra-low latency, ensuring real-time performance for the most demanding applications.

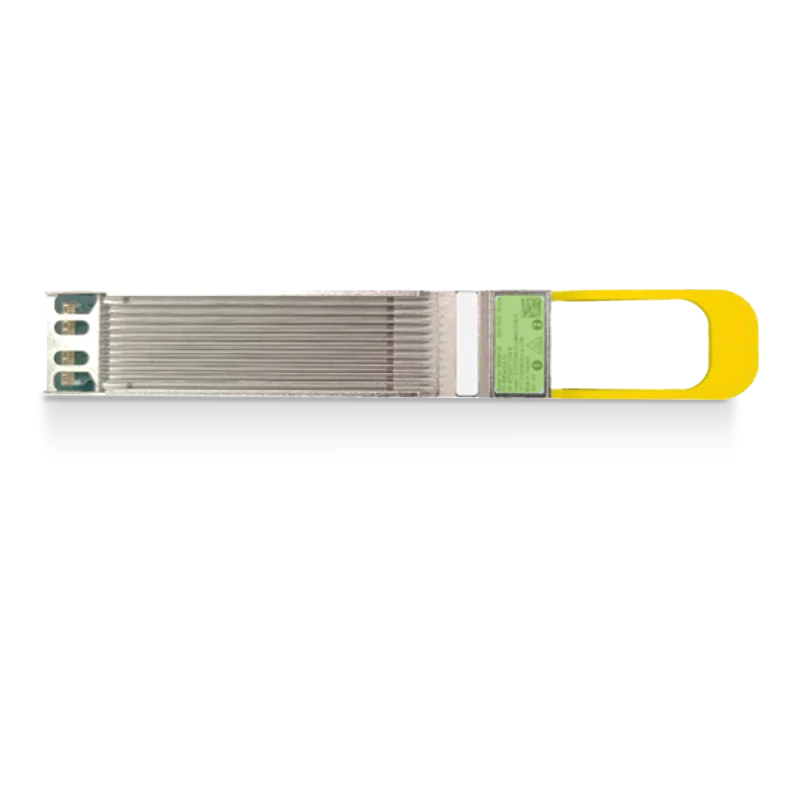

NADDOD 400G InfiniBand NDR modules come in the OSFP and QSFP112 form factors and run over multimode or single-mode fiber. The product portfolio comprises 400G OSFP/QSFP112 SR4 and 400G OSFP DR4. Transmission distances range from 50 to 100 meters. 400G IB modules are often paired with 800G transceivers; compatible fiber cables ensure seamless connectivity and interoperability between 400G and 800G modules. NADDOD 400G IB transceivers offer higher speeds and greater efficiency for bandwidth-intensive applications.

NADDOD InfiniBand NDR 400G OSFP to OSFP DAC cables are designed for 400G InfiniBand networking, AI clusters, and high-performance computing (HPC) interconnects, and support data rates of up to 400Gbps. This InfiniBand 400G DAC cable provides low-latency, power-efficient connectivity between OSFP ports. It is mainly used to interconnect 400G NICs and is fully compatible with NVIDIA NDR HCAs.

NADDOD 400G breakout InfiniBand NDR direct attached copper cables (DAC) have an 8-channel twin-port OSFP end with a finned-top form factor for use in Quantum-2 and Spectrum-4 switch cages. They provide connectivity between system units with an OSFP 400Gb/s connector on one side and two separate QSFP56 200Gb/s connectors on the other. NADDOD breakout DAC offers lower latency over short distances and suits a variety of application scenarios such as data centers, high-performance computing, and storage networks, especially environments that require rapid deployment and flexible configuration.

NADDOD's 400G breakout InfiniBand HDR DAC includes a 400G OSFP to 4x100Gb/s QSFP56 InfiniBand HDR passive copper splitter cable. It provides connectivity between system units with an OSFP twin-port 2x200Gb/s port on one side and four 100Gb/s QSFP56 ports on the other, and supports both InfiniBand and Ethernet networking.

NADDOD 400G breakout InfiniBand NDR active optical cables (AOC) provide connectivity between system units with an OSFP 400Gb/s connector on one side and two separate QSFP56 200Gb/s connectors on the other, such as a switch and two servers. The NADDOD 400G AOC supports data rates of up to 400Gbps in a smaller, lighter package, meeting the demand for high bandwidth and manageable cabling in data centers and high-performance computing environments.

NADDOD provides four types of MPO-12 APC female single-mode and multimode elite trunk cables. The NADDOD IB NDR APC fiber patch cord minimizes reflections at the fiber connection and meets the stringent optical surface requirements of IB NDR transceivers. Paired with NADDOD APC patch cords, NDR transceivers show reduced signal distortion and a raw physical BER better than 1E-8, and are fully compatible with InfiniBand systems. NADDOD provides reliable solutions for high-quality signal transmission.

NADDOD's InfiniBand NDR connectivity product line includes 800Gb/s OSFP transceivers, 400Gb/s OSFP and QSFP112 transceivers, 800G passive and active direct attached copper cables (DAC), 800G and 400G breakout DAC, 400Gb/s OSFP to 2x QSFP56 breakout NDR MMF active optical cables (AOC), and four types of fiber cable. 800G/400G NDR cables and transceivers are commonly used in next-generation data centers and high-performance computing environments, offering high speed and efficiency for the most demanding network applications.

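NADDOD's 200G InfiniBand HDR QSFP56 AOC is a QSFP56 VCSEL-based (Vertical Cavity Surface-Emitting Laser) active optical cable (AOC) designed for use in 200Gb/s InfiniBand HDR systems. The 200G AOC offers high port density and configurability, and a much longer reach than passive copper cables in the data center. Rigorous production testing ensures the best out-of-the-box installation experience, performance, and durability.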

NADDOD’s 200G OSFP to QSFP56 AOC includes 200Gb/s OSFP to 200Gb/s QSFP56 active optical cables. It provides high-speed connectivity between system units with an OSFP port on one side and a 200Gb/s QSFP56 port on the other side, supporting InfiniBand networking.

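NADDOD's 200G InfiniBand HDR QSFP56 DAC is mainly used for 200G high-bandwidth links. It supports 200G Ethernet rates and InfiniBand HDR, and provides a direct-attach copper cable solution from QSFP56 to QSFP56. It is suited to very short links and offers an affordable way to establish 200-gigabit links between adjacent racks.

NADDOD's 200G InfiniBand HDR QSFP56 breakout AOC is a QSFP56 to 2x100G QSFP56 breakout active optical cable that operates over multimode fiber. It complies with IEEE 802.3, QSFP56 MSA, SFF-8024, SFF-8679, SFF-8665, SFF-8636, and InfiniBand HDR. It connects a 200G QSFP56 port on one end to two 100G QSFP56 ports on the other, and is suited to quick and easy connections within a rack or between adjacent racks.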

NADDOD's InfiniBand HDR 200G 2xQSFP56 to 2xQSFP56 active optical splitter cable (AOC), compatible with Mellanox® MFS1S90-HxxxE, is a QSFP56 VCSEL-based (Vertical Cavity Surface-Emitting Laser) cable designed for use in 200Gb/s InfiniBand HDR (High Data Rate) systems. It provides cross-connectivity between a 200G Top of Rack (ToR) switch port configured as 2x100G ports and two 200G spine switch ports also configured as 2x100G ports. This offers a substantial CAPEX saving by reducing the required number of spine ports and cables. For data centers with more than 1600 servers, it also removes the need for a third layer of switches.

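NADDOD's 200G InfiniBand HDR QSFP56 breakout cable (DAC) is a high-speed, cost-effective alternative to fiber optics in 200Gb/s InfiniBand HDR applications. It provides connectivity between system units with a 200Gb/s HDR QSFP56 port on one side and two 100Gb/s QSFP56 ports on the other. Rigorous cable production testing ensures the best out-of-the-box installation experience, performance, and durability.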

NADDOD offers HDR-capable QSFP56 multimode and single-mode transceivers with reaches of up to 100m and 2km. NADDOD 200G IB HDR transceivers include 200G QSFP56 SR4 and DR4 modules designed for use in high-performance 200Gb/s InfiniBand HDR systems.

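The InfiniBand HDR connectivity product line offers 200Gb/s QSFP56 IB HDR MMF AOCs, active optical splitter cables, passive direct attach copper cables (DAC), passive copper hybrid cables, and optical transceivers. 200Gb/s QSFP56 InfiniBand HDR cables and transceivers are commonly used throughout the InfiniBand network infrastructure to connect top-of-rack switches (such as QM8700/QM8790) to NVIDIA GPU servers (such as A100/H100/A30), CPU servers, and storage network adapters (such as ConnectX-5/6/7 VPI), as well as for switch-to-switch connections. For GPU-accelerated high-performance computing (HPC) cluster applications such as model rendering, artificial intelligence (AI), deep learning (DL), and NVIDIA Omniverse applications, they deliver cost savings of up to 50% on InfiniBand HDR networks.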

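NADDOD's 100G InfiniBand EDR QSFP28 AOC is an active optical fiber assembly with QSFP28 connectors that operates over multimode fiber (MMF). This AOC complies with the QSFP28 MSA and RoHS-6 standards. It offers a more cost-effective solution than using separate optical transceivers and optical patch cables. The NADDOD 100G InfiniBand EDR QSFP28 AOC is suited to 100Gbps connections within a rack and between adjacent racks.

NADDOD's 100G InfiniBand EDR QSFP28 DAC is mainly used for 100G high-bandwidth links. It supports 100G Ethernet rates and InfiniBand EDR, and provides a direct-attach copper cable solution from QSFP28 to QSFP28. It is suited to very short links and offers an affordable way to establish 100-gigabit links between adjacent racks.

NADDOD 100G InfiniBand EDR modules come in the QSFP28 form factor and use single-mode and multimode fiber as the medium. The product portfolio includes 100G QSFP28 SR4, PSM4, LR4, and CWDM4. 100G QSFP28 optical modules support transmission distances from 70 meters to 10 kilometers and provide low-latency, high-throughput communication. They are used by a wide range of customers.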

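The InfiniBand EDR line includes InfiniBand 100G QSFP28 to QSFP28 EDR AOCs, EDR DACs, and EDR transceivers, and is ideal for connecting to the Mellanox EDR 100Gb switches SB7800/SB7890. When running HPC, cloud, model rendering, storage, and NVIDIA Omniverse applications on InfiniBand 100Gb networks, it provides high-performance connectivity and savings of 50% for GPU-accelerated computing.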

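NVIDIA MMS4X00-NS and MMA4Z00-NS are among the InfiniBand transceivers that offer high performance, low latency, and low BER for AIDC. NADDOD offers highly cost-effective InfiniBand transceivers that are 100% compatible with NVIDIA systems. Click through to the 800G transceivers.

NVIDIA LinkX InfiniBand transceivers comprise the MMS4X00-NS and MMA4Z00-NS, which are suited to high-quality data transmission over 50m and 100m in data centers with the highest ROI and lowest TCO. NADDOD offers highly cost-effective NDR products that are 100% compatible with NVIDIA systems. Click through to the 800G transceivers.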

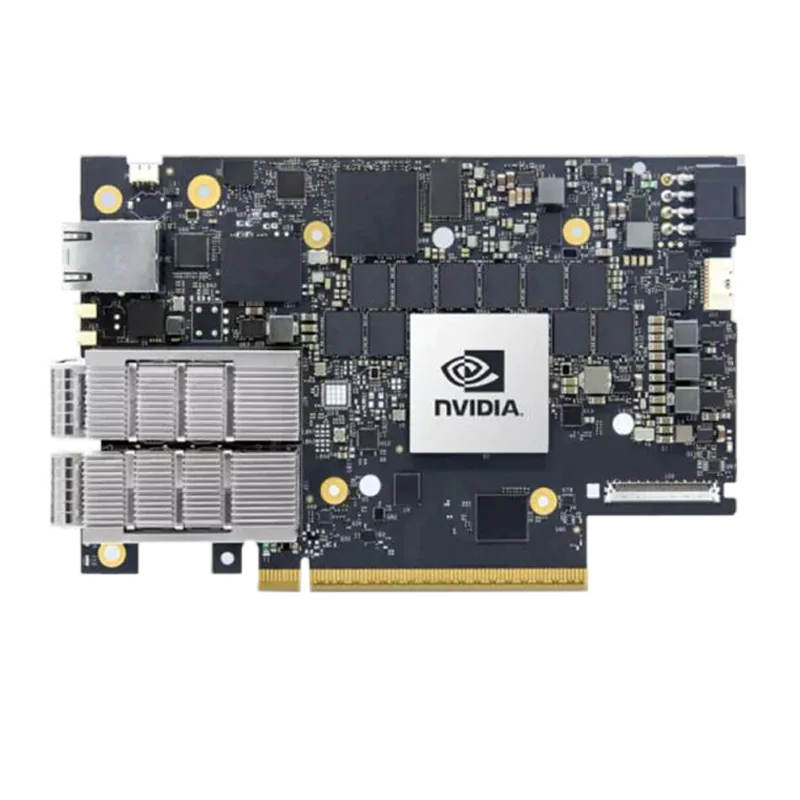

NVIDIA ConnectX-8 SuperNICs include the ConnectX-8 800G options C8180 (900-9X81E-00EX-DT0) and C8180 (900-9X81E-00EX-ST0), the ConnectX-8 400G SuperNIC C8240 (900-9X81Q-00CN-ST0), and the companion PCIe Auxiliary Card Kit C8180X (930-9XAX6-0025-000), which is designed for pairing with the C8180 (900-9X81E-00EX-ST0) and C8240 (900-9X81Q-00CN-ST0) adapters. These products enable advanced routing and telemetry-based congestion control capabilities, delivering maximum network performance and peak AI workload efficiency, while supporting both InfiniBand and Ethernet networking.

The ConnectX-7 smart host channel adapter (HCA) offers ultra-low latency, 400Gb/s throughput, and advanced NVIDIA In-Network Computing acceleration engines for enhanced performance. It provides the scalability and robust features essential for supercomputers, artificial intelligence, and hyperscale cloud data centers.

The ConnectX-6 smart host channel adapter (HCA) utilizes the NVIDIA Quantum InfiniBand architecture to deliver exceptional performance and NVIDIA In-Network Computing acceleration engines, enhancing efficiency in HPC, artificial intelligence, cloud, hyperscale, and storage environments.

NVIDIA® ConnectX® InfiniBand smart adapters achieve outstanding performance and scalability through faster speeds and innovative In-Network Computing. NVIDIA ConnectX effectively lowers operational costs, boosting ROI for high-performance computing (HPC), machine learning (ML), advanced storage, clustered databases, and low-latency embedded I/O applications.

The NVIDIA Quantum-X800 Q3200-RA and Q3400-RA switches deliver high performance for AI workloads. The Q3400-RA leverages 200Gb/s-per-lane serializer/deserializer (SerDes) technology, which significantly enhances network performance and bandwidth. NADDOD SiPh-based OSFP-1.6T-2xDR4H modules typically connect to the Q3400-RA to deliver high bandwidth and low power consumption in hyperscale data centers. The NVIDIA Quantum-X800 Q3400-RA features 144 ports at 800Gb/s distributed across 72 Octal Small Form-factor Pluggable (OSFP) cages, with ultra-low latency and high bandwidth for next-gen AI.
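As a rough sketch, the Q3400-RA figures quoted above can be cross-checked with a few lines of arithmetic. Only the four input numbers come from the text; the variable names are illustrative, and the aggregate is a simple one-direction sum of port rates, not a vendor-published figure.

```python
# Inputs taken from the paragraph above.
PORTS = 144        # 800Gb/s ports on the Q3400-RA
PORT_GBPS = 800    # rate per port
CAGES = 72         # OSFP cages
LANE_GBPS = 200    # SerDes rate per lane

# Derived values (illustrative names, simple division).
ports_per_cage = PORTS // CAGES             # ports sharing one OSFP cage
cage_gbps = ports_per_cage * PORT_GBPS      # bandwidth per cage
lanes_per_port = PORT_GBPS // LANE_GBPS     # SerDes lanes behind each port
aggregate_tbps = PORTS * PORT_GBPS / 1000   # one-direction sum of port rates

print(f"{ports_per_cage} ports per cage, {cage_gbps}G per cage")
print(f"{lanes_per_port} lanes per 800G port")
print(f"{aggregate_tbps} Tb/s across all ports (one direction)")
```

Two 800Gb/s ports per cage is consistent with the twin-port 1.6T OSFP modules described for this switch.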

The NVIDIA Quantum-2-based QM9700 and QM9790 switch systems deliver 64 ports of NDR 400Gb/s InfiniBand in a standard 1U chassis. A single switch carries an aggregated bidirectional throughput of 51.2 terabits per second (Tb/s), with a capacity of more than 66.5 billion packets per second (BPPS).
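The 51.2 Tb/s figure follows directly from the port count and port rate. A minimal sketch, assuming "bidirectional" means each port's rate is counted once per direction; the function name `aggregate_bidirectional_tbps` is hypothetical:

```python
def aggregate_bidirectional_tbps(ports: int, port_gbps: int) -> float:
    """Sum of all port rates, counted in both directions, in Tb/s.

    Hypothetical helper for illustration only.
    """
    return ports * port_gbps * 2 / 1000


# 64 NDR ports at 400Gb/s each, as stated for the QM9700/QM9790.
print(aggregate_bidirectional_tbps(64, 400))
```

The result agrees with the 51.2 Tb/s quoted in the switch description.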

The NVIDIA InfiniBand QM8700 Series delivers high-performance networking solutions with low latency and exceptional scalability. Designed for supercomputing and data center environments, this series enhances connectivity and efficiency for demanding applications, ensuring reliable performance in modern computing landscapes.

NVIDIA's InfiniBand Switching solutions are designed to optimize high-performance computing and cloud-native environments. With features like adaptive routing, self-healing capabilities, and enhanced quality of service (QoS), these solutions ensure reliable, scalable connectivity for supercomputing applications, driving efficiency and performance in your data center.

The BlueField-3 DPU is a cloud infrastructure processor that empowers organizations to build software-defined, hardware-accelerated data centers from the cloud to the edge. BlueField-3 DPUs offload, accelerate, and isolate software-defined networking, storage, security, and management functions, significantly enhancing data center performance, efficiency, and security.

The BlueField-3 SuperNIC is a novel class of network accelerator that’s purpose-built for supercharging hyperscale AI workloads. For modern AI clouds, the BlueField-3 SuperNIC enables secure multi-tenancy while ensuring deterministic performance and performance isolation between tenant jobs.

The NVIDIA DPUs and SuperNICs provide specialized hardware accelerators for modern data centers. BlueField DPUs handle infrastructure offload tasks like networking, storage, and security, freeing up critical CPU resources. SuperNICs deliver ultra-low latency connectivity optimized for large-scale AI and HPC environments.