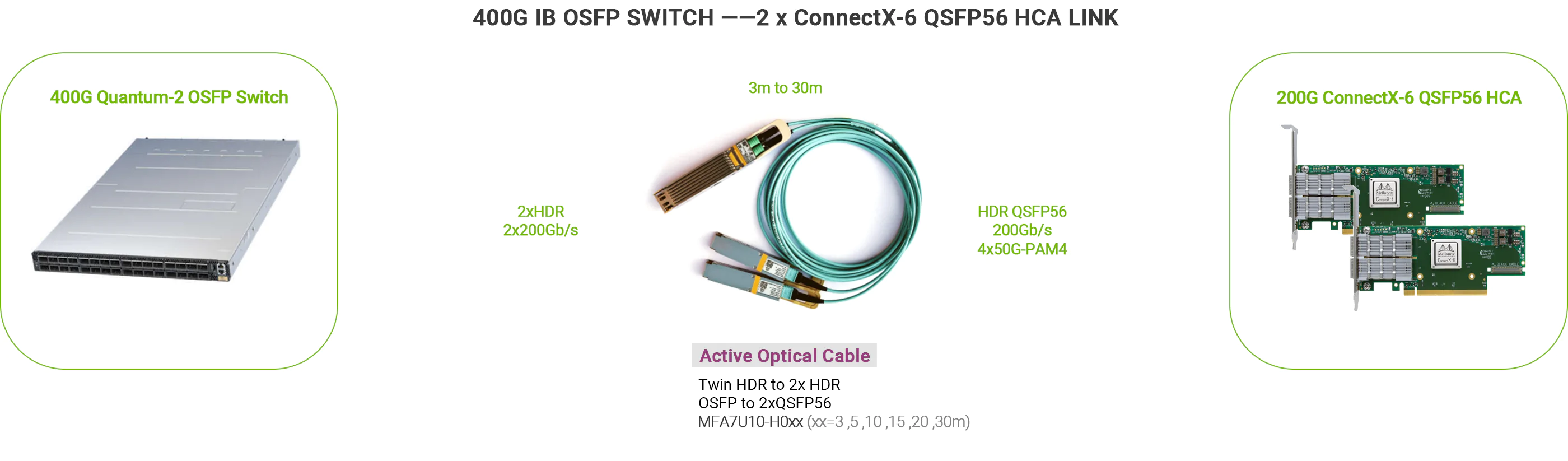

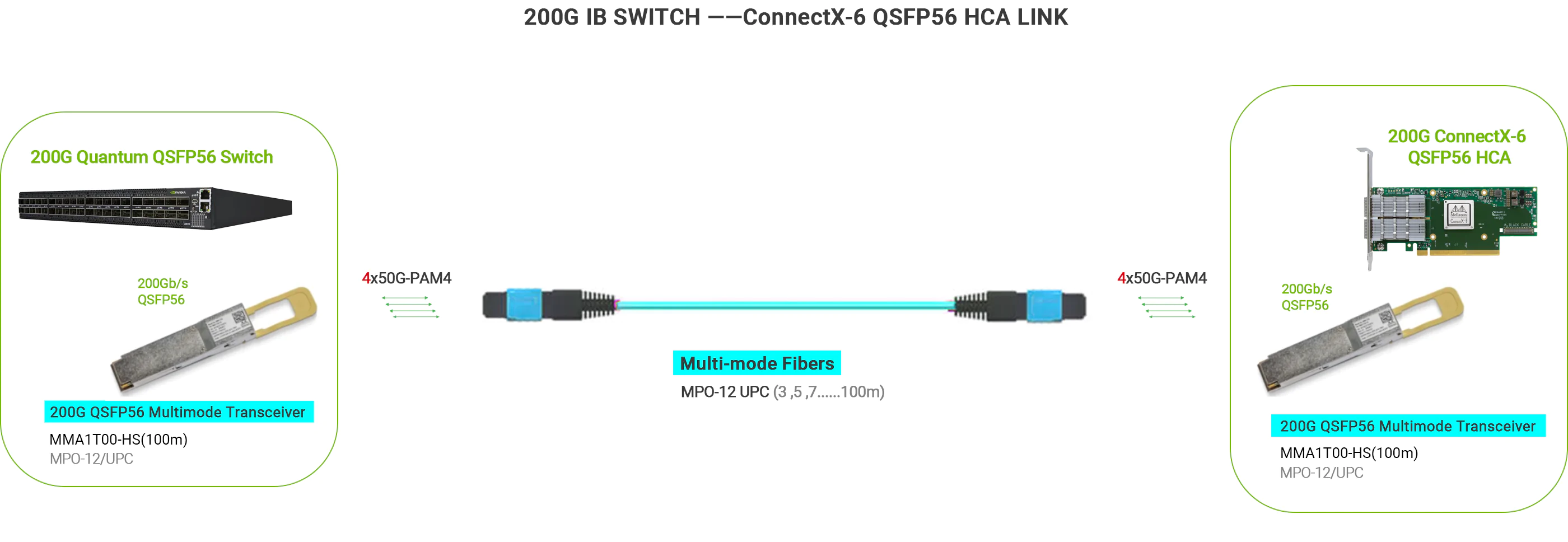

GPU Direct (NVIDIA GPUDirect) moves data directly from the memory of one GPU to the memory of another, so each GPU can access the other's memory remotely without staging the data through host memory. Removing that intermediate copy raises bandwidth and lowers latency, which makes GPU cluster operations considerably more efficient.
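To make the idea of a direct GPU-to-GPU transfer concrete, here is a minimal sketch using the CUDA runtime's peer-to-peer calls. It is not taken from the original article and covers only the single-node case, where two GPUs sit in the same machine; the cross-node RDMA path additionally needs an RDMA-capable NIC and its own networking stack, which is not shown. The device IDs (0 and 1), the 64 MiB buffer size, and the `CHECK` macro are illustrative assumptions.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                \
    do {                                                           \
        cudaError_t err_ = (call);                                 \
        if (err_ != cudaSuccess) {                                 \
            std::fprintf(stderr, "%s failed: %s\n", #call,         \
                         cudaGetErrorString(err_));                \
            std::exit(EXIT_FAILURE);                               \
        }                                                          \
    } while (0)

int main() {
    int device_count = 0;
    CHECK(cudaGetDeviceCount(&device_count));
    if (device_count < 2) {
        std::printf("Need at least two GPUs for a peer-to-peer copy.\n");
        return 0;
    }

    // Ask the runtime whether each GPU can address the other's memory.
    int can_0_to_1 = 0, can_1_to_0 = 0;
    CHECK(cudaDeviceCanAccessPeer(&can_0_to_1, 0, 1));
    CHECK(cudaDeviceCanAccessPeer(&can_1_to_0, 1, 0));

    if (can_0_to_1 && can_1_to_0) {
        // Enable direct access in both directions.
        CHECK(cudaSetDevice(0));
        CHECK(cudaDeviceEnablePeerAccess(1, 0));
        CHECK(cudaSetDevice(1));
        CHECK(cudaDeviceEnablePeerAccess(0, 0));
        std::printf("Peer access enabled between GPU 0 and GPU 1.\n");
    } else {
        // cudaMemcpyPeer still works here, but the runtime may stage
        // the copy through host memory instead of going direct.
        std::printf("Peer access unavailable; copy may be staged.\n");
    }

    const size_t bytes = size_t{64} << 20;  // 64 MiB, arbitrary size
    void *src = nullptr, *dst = nullptr;

    // Allocate and fill the source buffer on GPU 0.
    CHECK(cudaSetDevice(0));
    CHECK(cudaMalloc(&src, bytes));
    CHECK(cudaMemset(src, 0xAB, bytes));
    CHECK(cudaDeviceSynchronize());

    // Allocate the destination buffer on GPU 1.
    CHECK(cudaSetDevice(1));
    CHECK(cudaMalloc(&dst, bytes));

    // Copy from GPU 0 memory to GPU 1 memory.
    CHECK(cudaMemcpyPeer(dst, 1, src, 0, bytes));
    CHECK(cudaDeviceSynchronize());

    // Read one byte back to the host to confirm the data arrived.
    unsigned char probe = 0;
    CHECK(cudaMemcpy(&probe, dst, 1, cudaMemcpyDeviceToHost));
    std::printf("Copy %s.\n", probe == 0xAB ? "verified" : "mismatch");

    CHECK(cudaFree(dst));
    CHECK(cudaSetDevice(0));
    CHECK(cudaFree(src));
    return 0;
}
```

Assuming a machine with the CUDA toolkit and at least two GPUs, this should build with `nvcc p2p_copy.cu -o p2p_copy` (the file name is arbitrary). Whether peer access is reported as available depends on the GPU models and how they are connected.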


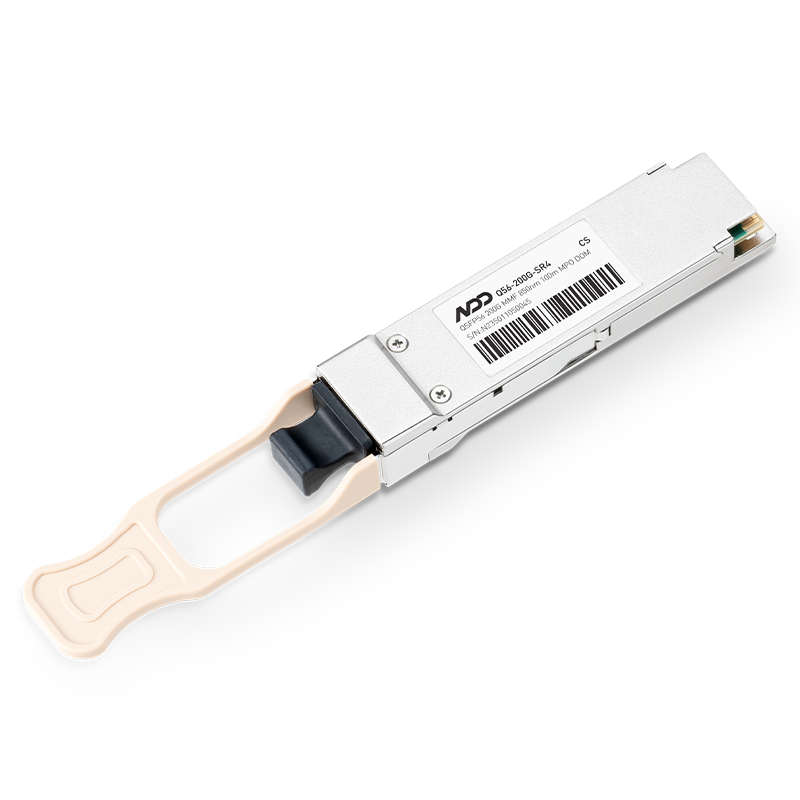

200G QSFP56 to QSFP56 AOC In Stock

200G QSFP56 AOC Hot

200G QSFP56 DAC Hot